I have been reading Occam’s Razor for quite a while now, and I really like Avinash’s style and insights. I thought it would be nice to reread most of his posts and, as a nice extra, post my notes here.

I organized these notes by the sections defined in his overview of all articles.

Enjoy!

The 10 / 90 Rule for Magnificent Web Analytics Success

- There is lots of data but no insights

- Rule: 10% in tools and 90% people/analysts

- This may seem over the top, but:

- med-large websites are complex

- reports aren’t meaningful by default

- tools have to be understood

- there is more than clickstream to analytics

- If you don’t follow the 10 / 90 Rule

- Get GA account

- Track in parallel with your expensive solution

- Find a metrics multiplier, so you can compare GA to old data

- Cancel your contract and hire a smart analyst, who will probably deliver more insights for less money
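
One way to picture the metrics multiplier from the steps above: while both tools track in parallel, divide the old tool's total by GA's total for the same days, then use that ratio to put GA numbers on the old tool's scale. This is only a minimal sketch of my own reading of the idea. The function names and the daily visit counts are hypothetical, not taken from the article.

```python
def metrics_multiplier(old_tool_daily, ga_daily):
    """Ratio of old-tool totals to GA totals over the parallel-tracking period."""
    if len(old_tool_daily) != len(ga_daily):
        raise ValueError("both series must cover the same days")
    ga_total = sum(ga_daily)
    if ga_total == 0:
        raise ValueError("GA recorded no traffic in this period")
    return sum(old_tool_daily) / ga_total


def to_old_scale(ga_value, multiplier):
    """Express a GA number on the old tool's scale so it can be compared to old data."""
    return ga_value * multiplier


# Hypothetical daily visits recorded by both tools on the same four days.
old_tool_visits = [1200, 1100, 1300, 1250]
ga_visits = [1000, 1000, 1000, 1000]

multiplier = metrics_multiplier(old_tool_visits, ga_visits)
comparable = to_old_scale(2000, multiplier)
print(f"multiplier: {multiplier:.4f}, GA 2000 visits on the old scale: {comparable:.0f}")
```

A longer parallel period should give a more stable ratio, and it probably makes sense to compute one multiplier per metric (visits, page views, etc.) rather than reusing a single number.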

Trinity: A Mindset & Strategic Approach

- The goal is to generate actionable insights

- Components:

- Behavior analysis: clickstream data analysis

- Outcomes analysis: Revenue, conversion rates, Why does your website exist?

- Experience: Customer satisfaction, testing, usability, voice of customer

- Helps you understand what customers experience on your site, so that you can influence their behavior

The Promise & Challenge of Behavior Targeting (& Two Prerequisites)

- We have so much behavior data, but you get the same content regardless of whether you are here to buy or to get support

- There are BT systems, but you still have to think about the input

- You first have to understand your customers well enough to create suitable content

- Test content ideas first to learn what works and as evidence for HiPPOs

Six Rules For Creating A Data Driven Boss!

- Paradox: The bigger the organization, the less likely it is to be data driven, in spite of spending lots of money on tools

- It is possible to achieve this, but you have to actually want it and fight for it

- 1. Get over yourself: Learn how to communicate with your boss and try to solve his problems

- 2. Embrace incompleteness: Data is messy, web data is really messy but still better than completely faith based initiatives.

- 3. Give 10% extra: Don’t just report data, look at it. Give him insights he didn’t ask for. Make recommendations and explain what’s broken.

- 4. Become a marketer: Great analysts are customer people. Marketer as internal customer (like account planner)

- 5. Don’t put business in the service of data: Data should provide insights, not just more data. Ask: how many decisions have been made based on data that have added value to the revenue?

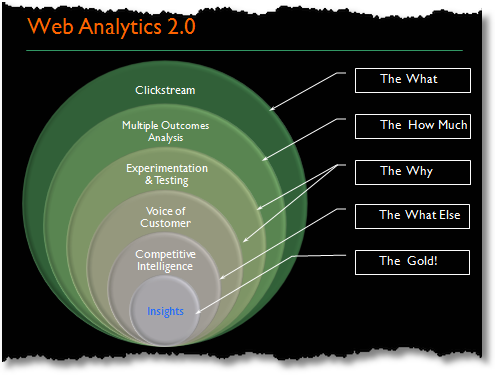

- 6. Adopt a Web Analytics 2.0 mindset

Lack Management Support or Buy-in? Embarrass Them!

- HiPPOs may not listen to you, but they had better listen to customers & competitors

- 1. Start testing

- 2. Capture Voice of Customer: Surveys, usability tests, etc.: Let the customer do the talking

- 3. Benchmark against the competition, e.g. use Fireclick

- 4. Use Competitive Intelligence

- 5. Start with a small website

- 6. Ask outsiders for help

How To Excite People About Web Analytics: Five Tips.

- 1. Give them answers

- 2. Talk in outcomes / measure impact

- 3. Find people with low hanging fruit and make them a hero

- 4. Use customers & competitors

- 5. Make Web Analytics fun: Hold contests, hold internal conferences, hold office hours

Redefining Innovation: Incremental, w/ Side Effects & Transformational

- 1. Incremental innovation, e.g. Kaizen

- 2. Incremental innovation with side effect, e.g. iPod or Adsense

- 3. Transformational innovation, e.g. invention of the wheel

- Web analytics probably can’t create 3

- Clickstream alone is also not enough for 1.

- generally the more the better (Web analytics 2.0)

Six Tips For Improving High Bounce Rate / Low Conversion Web Pages

- Purpose gap between customer intent and page

- 1. Learn about traffic sources / keywords(!)

- 2. Do you push your customers against their intent? Identify the job of each page and focus on your calls to action.

- 3. Ask your customer what they are looking for

- 4. Get insights from site overlays

- 5. Testing!

- 6. Get first impressions from people, e.g. fivesecondtest

Online Marketing Still A Faith Based Initiative. Why? What’s The Fix?

- Faith based initiatives like TV, magazines, etc.

- Online marketing gives us useable data

- and allows us to test easily

- The web is quite old yet it is not in the blood of executives

- Old mental model: shout marketing instead of the new inbound marketing

- Lousy standards for accountability

- Let the customers speak

- Benchmark against competition

Win With Web Metrics: Ensure A Clear Line Of Sight To Net Income!

- Focus on the bottom line, i.e. profits

- 1. Identify your Macro Conversion

- 2. Report revenue

- 3. Identify your Micro Conversions

- 4. Compute the economic value
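
As a rough illustration of step 4, one way to compute economic value is to add the revenue from the macro conversion to the estimated value of each micro conversion (count times an assumed value per conversion). This is a sketch under my own assumptions: the conversion names, counts and per-conversion values below are made up for the example and would have to come from your own business.

```python
def total_economic_value(macro_revenue, micro_conversions):
    """Macro-conversion revenue plus the estimated value of all micro conversions.

    micro_conversions maps a name to (count, estimated value per conversion).
    """
    micro_value = sum(count * value for count, value in micro_conversions.values())
    return macro_revenue + micro_value


# Hypothetical numbers for one month.
micro = {
    "newsletter_signup": (200, 2.50),   # 200 signups, assumed worth 2.50 each
    "brochure_download": (50, 10.00),   # 50 downloads, assumed worth 10.00 each
}

total = total_economic_value(15000.0, micro)
print(f"total economic value: {total:.2f}")
```

The hard part is not the arithmetic but agreeing on the value per micro conversion, which is why those numbers are best set together with the business side.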

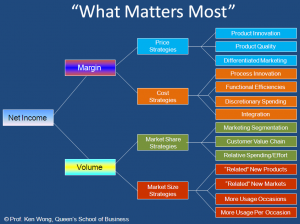

- Net income = Unit Margins * Unit Volumes

- Unit Margins = Price – Cost

- Unit Volumes = Market Share * Market Size

- Because Net Income is the goal, you have to measure Price, Cost, Market Share or Market Size

- Which metrics help you do that? If a metric doesn’t, why do you track/report it?

- They also depend on the strategies or more general goals of the organization

- let your “boss” decide what matters most to him/organization

- identify clear metrics / KPIs for each strategy used

- use the web analytics measurement framework as a reporting foundation (more on this later)

- find actionable insights with segmented analysis
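
The net income breakdown from this section is easy to write down as a small function, which also makes it obvious that every metric you report should move one of the four inputs. A minimal sketch, with hypothetical example numbers:

```python
def net_income(price, cost, market_share, market_size):
    """Net income = unit margins * unit volumes, as in the notes above."""
    unit_margin = price - cost                 # Unit Margins = Price - Cost
    unit_volume = market_share * market_size   # Unit Volumes = Market Share * Market Size
    return unit_margin * unit_volume


# Hypothetical business: price 50, cost 30, 25% share of a 100,000-unit market.
baseline = net_income(price=50.0, cost=30.0, market_share=0.25, market_size=100_000)

# The same business after cutting unit cost by 2.
with_lower_cost = net_income(price=50.0, cost=28.0, market_share=0.25, market_size=100_000)

print(f"baseline: {baseline:.0f}, with lower cost: {with_lower_cost:.0f}")
```

Playing with one input at a time like this is a quick way to check which lever a given web metric is supposed to influence.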

Digital Marketing and Measurement Model

- Combining marketing with measurement helps you identify success and failure

- Digital Marketing & Measurement Model

- Set business objectives (should be DUMB)

- Doable

- Understandable

- Manageable

- Beneficial

- Identify goals for each objective

- Get KPIs for each goal

- Set targets for each KPI

- Identify segments of people / outcomes / behavior to understand why things succeeded or failed

- What scope does the model have to cover?

- Acquisition: How do people come to your site? Why? How should it be?

- Behavior: What should people do on your site? What are the actions they should take? How do you influence their behavior?

- Outcomes: What are the goals? (see previous summary)
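
The hierarchy of the model (objective, goals, KPIs, targets, segments) can be sketched as a small data structure. This is just my own way of writing it down, not something from the article: the class names, the example objective and all numbers are hypothetical, and the check assumes that a higher KPI value is better.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class KPI:
    name: str
    target: float
    actual: float

    def on_target(self) -> bool:
        # Assumption: higher is better for every KPI in this sketch.
        return self.actual >= self.target


@dataclass
class Goal:
    name: str
    kpis: List[KPI] = field(default_factory=list)


@dataclass
class Objective:
    name: str
    goals: List[Goal] = field(default_factory=list)
    segments: List[str] = field(default_factory=list)


def missed_kpis(objective: Objective) -> List[Tuple[str, str]]:
    """Return (goal name, KPI name) pairs for every KPI below its target."""
    return [
        (goal.name, kpi.name)
        for goal in objective.goals
        for kpi in goal.kpis
        if not kpi.on_target()
    ]


# Hypothetical objective with one goal, two KPIs and two segments.
objective = Objective(
    name="Generate leads",
    goals=[
        Goal(
            name="Capture contact details",
            kpis=[
                KPI("Lead form conversion rate", target=0.03, actual=0.025),
                KPI("Newsletter signups per month", target=400, actual=450),
            ],
        )
    ],
    segments=["Traffic source", "New vs. returning visitors"],
)

missed = missed_kpis(objective)
print(missed)
```

The KPIs that miss their targets are the ones worth breaking down by the listed segments to understand why things failed.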

11 Digital Marketing “Crimes Against Humanity”

- Not spending 15% of your marketing budget on new stuff

- Not having a fast, functional, mobile-friendly website

- Use of Flash

- Campaigns that lead to nowhere

- Not having a vibrant, engaging blog

- “Shouting” on Twitter / Facebook

- Buying links is your SEO strategy

- Not following the 10/90 rule

- Not using the Web Analytics Measurement Model (previous summary)

- Using lame metrics: Impressions, Page Views, etc.

- Not centering your digital existence on Economic Value